“But what about DeepL?” Academic Texts and Machine Translation

I’ve lost count of how many times it’s happened to me – I tell someone I’m a translator, and they say “Oh, but what about DeepL?” While I believe their concern that I might soon be out of a job is somewhat premature, it is undeniable that machine translation (MT) – more specifically, neural machine translation (NMT), which is often described as using artificial intelligence (AI) – has radically impacted the translation profession and will continue to change it over the years to come, probably in ways we can’t even imagine right now. So I thought a blog post on the subject might be a useful way of offering readers some information and advice. This is not going to be a general post about AI, its many problems, and the ethics of using it. (My personal take is that it is indeed deeply problematic, but it’s here to stay and we had better get savvy about it and its use.) Rather, I want to highlight a few practical issues with MT that are particularly relevant to academic translation.

Background[i]

When we speak of machine translation today, we are usually talking about neural machine translation. NMT employs a) vast amounts of text that are available in multiple language versions to produce translations based on the statistical frequency with which certain words or phrases occur in particular contexts, and b) neural networks, i.e. computational models that are loosely inspired by the networks of neurons in the human brain. Compared to earlier forms of machine translation, NMT produces much better texts, albeit not uniformly and not without problems, as we will see. Nevertheless, its quality is such that it is often described as sounding the death knell of the translation profession. DeepL is probably the best-known NMT tool, and many translators now use a “professional version” that plugs into their standard software. Full disclosure: I often use this plug-in too as it saves time typing, even though I have yet to accept a single sentence it has suggested unedited! Based on my own experience, I believe that it will still be some time before professional human translators are fully replaced by machines in the academic field. In particular, I have noted that NMT struggles with the following (all examples are given in my working languages, German and English):

Consistency

When a human translates a text, they read the text as a whole. For example, if “die EU-Kommission” occurs in one sentence, they will realise that “die Kommission” mentioned a few sentences later likewise refers to the EU Commission and won’t translate the term “Kommission” as “committee”. MT will produce accurate-sounding target sentences, but it may well translate the same term differently from sentence to sentence because it simply doesn’t take the text as a whole into account (for all the talk of “intelligence”, it doesn’t actually understand the text). Careful revision by a human is therefore required.
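For readers who like to automate part of this check, here is a minimal sketch in Python of how inconsistent renderings could be flagged for a human reviser. It is an illustration only, not a feature of DeepL or any other tool: the function name `find_term_issues`, the sample sentences, and the one-entry glossary are all my own assumptions, and the matching is deliberately naive (it does not handle inflected forms such as plurals).

```python
import re


def find_term_issues(pairs, glossary):
    """Return (sentence index, source term) for every aligned sentence pair
    in which a glossary term occurs in the source but none of its approved
    renderings occurs in the target.

    pairs    -- list of (source sentence, target sentence) tuples (hypothetical data)
    glossary -- dict mapping a source term to a list of approved renderings
    """
    issues = []
    for index, (source, target) in enumerate(pairs):
        target_lower = target.lower()
        for term, renderings in glossary.items():
            # Whole-word, case-insensitive match of the term in the source.
            if re.search(rf"\b{re.escape(term)}\b", source, flags=re.IGNORECASE):
                # Flag the pair if no approved rendering appears in the target.
                if not any(r.lower() in target_lower for r in renderings):
                    issues.append((index, term))
    return issues


# Hypothetical aligned sentences: the second one renders "Kommission" as "committee".
pairs = [
    ("Die EU-Kommission legte einen Vorschlag vor.",
     "The EU Commission presented a proposal."),
    ("Die Kommission lehnte die Änderung ab.",
     "The committee rejected the amendment."),
]
glossary = {"Kommission": ["Commission"]}

print(find_term_issues(pairs, glossary))
```

A check like this only points the reviser to suspicious sentences. Deciding whether “committee” is actually wrong in a given passage still takes a human who has read the whole text.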

Terminology

Building on the previous point, MT is unable to recognise specialist terminology unless it occurs sufficiently frequently in a sufficiently large number of texts. Because it cannot recognise context (except in terms of statistics), it doesn’t know that “Geist” is usually translated as “spirit” when writing about Hegel and as “mind” when writing about Kant, and in a plethora of other ways depending on the subject matter at hand. Again, the human touch is needed.

Quotes and references

Academic texts usually contain numerous quotes from and references to primary and secondary sources – indeed, academic translators spend an inordinate amount of time tracking down quotes in official, published translations! MT of course doesn’t recognise quotes and certainly isn’t able to search for existing published translations. You might think that DeepL would be able to handle frequently quoted primary material such as EU legislation, but my experience is that even here the tool simply produces its own translation. That translation may sound pretty good, but it’s not a new translation that is required here. Careful checking against the sources is imperative.

Long, complex sentences

As a basic rule, the shorter and clearer the sentences in the source text, the better MT is able to deal with them. Academic texts are of course not known for short and clear syntax, and German ones even less so than English ones! This is a big challenge for MT, which frequently assigns subclauses to the wrong grammatical subjects. In many cases this is quite obvious and easily corrected, but in some cases NMT produces very plausible-sounding sentences whose errors only become apparent on a second look. This NMT trait – the ability to produce sentences that sound good but are inaccurate translations – is yet another reason why editing by a human remains crucial. (This is something that NMT has in common with other text-generating tools such as ChatGPT, which delivers texts that read well but whose content is unreliable.)

Obscure fields

As mentioned above, MT requires vast quantities of text, and its accuracy depends directly on the amount of material upon which it can draw. This means that it is able to produce reasonable translations in fields where there is a wealth of source material (such as law and medicine), but in others – music, for example, which is one of my areas of specialisation – it performs poorly. It has no idea when “Satz” means “setting”, when it means “movement”, when it means “phrase” or “period”; it hasn’t a clue when “Choral” means “chorale” and when it means “plainchant”. I usually turn my DeepL plug-in off when working on music-related texts as it produces such gobbledegook that it’s quicker for me not to use it!

Register and different stylistic conventions

MT is not good at detecting linguistic nuance and often struggles to render different registers accurately. This is a particular problem when the registers used in the source and target languages differ slightly. While both German and English use a formal register in academic writing, English is generally still less formal than German, and the literal translations of scholarly texts from German into English produced by MT very often sound stilted. When translating academic texts from German, I spend a lot of time resolving nominalisations, changing vague passive constructions into more specific active phrases, and breaking long and convoluted sentences down into shorter, punchier ones – all techniques to create a more readable English text that (at least for now) are beyond the capacity of MT. I often suggest changes to the titles of articles, or to chapter or section headings, as German and English follow quite different conventions in this regard, while MT will produce a more literal and thus unfortunately less usable translation.

Historical language

MT is also unable to cope well with historical (i.e. non-contemporary) language, simply because it doesn’t have a large enough corpus of this language upon which to draw. The earlier the language, the less accurate (and often outright nonsensical) the results. This is a major obstacle to using MT to translate academic texts that quote historical sources.

Confidentiality

This last issue is relevant to every AI-based tool. These tools use the material they are fed to learn and improve, and this self-learning function is of course one of their great advantages. But the material has then become part of the tool and is no longer private – for example, if you use a photo of yourself in Midjourney, there’s no guaranteeing that (parts of) your face won’t appear in somebody else’s image. The same applies to translation tools – if you feed in a text, that text may resurface in some form in someone else’s translation. There are ways of getting around this, of course – the professional version of DeepL deletes the texts it is used to translate immediately after translation. But I would be extremely cautious about using MT (even a “professional” version) for anything highly confidential.

So is machine translation a viable option for academic texts?

MT is certainly not at a stage where human intervention is no longer required. It is able to produce decent translations of straightforward texts, yes, but even these still need careful checking by a human. For longer, more complex texts, such as scholarly ones, MT at present produces mixed results that require meticulous editing with a particular eye to all the problems mentioned above – ideally by someone with some experience of the types of error MT makes. MT will no doubt become more sophisticated, and probably quite quickly, but I am doubtful that translations of highly specialised academic texts will be able to dispense with humans any time soon. What I believe will happen – or is already happening – is that more and more scholars will use MT tools to produce a first rough translation of their text, then turn to a translator to edit it. The general assumption is that this will be more cost-effective and quicker than getting a human to translate the text from scratch. Depending on the field in question, this may be true. But where a machine has produced a poor translation – and the likelihood of this is higher the more specialised and the smaller the field – this strategy will backfire, as it will take at least as much time to clean up the messy machine translation as it would have taken a human to translate the text. Nevertheless, as the pressure on university budgets increases, I expect we will see more and more academics turning to MT.

The added value of human academic translators

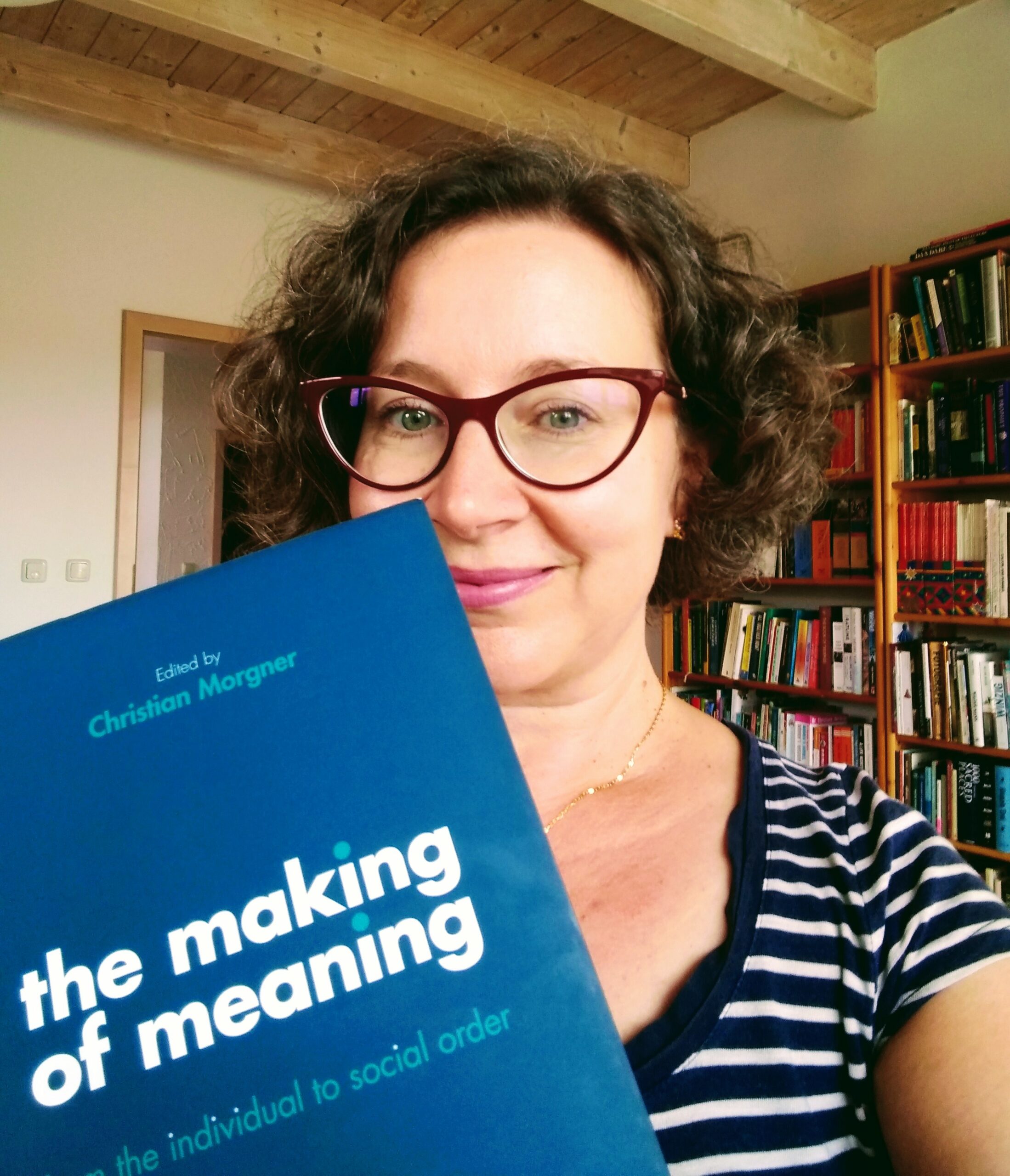

Human translators do far more than just translate. I mentioned above that when producing a translation, I often make suggestions for alternative phrasings, titles, and even lines of argument. This is because I translate texts not just from one language into another language, but from one context into another context. As a human being familiar with both the Anglo-American and German-continental academic systems, I am keenly aware of the different values and conventions that apply in each tradition, and this awareness informs the decisions I make in my translations. This contextual knowledge means I am able to offer my clients a lot more than mere translation – for example, recently a client wanted advice on how best to structure a book proposal for a British publisher before sending me their text to translate; when translating grant applications, I’ve commented on what kind of information needs to go into them; when authors have quoted a German poem to make a particular point, I’ve suggested an English poem with a similar meaning that would achieve a similar recognition effect with English-language readers. I doubt very much that a machine will be able to provide this kind of added value at any point in the foreseeable future. Quite aside from this, as a human I am able to discuss tricky phrases and passages with clients so that we arrive at solutions that satisfy us both! When it comes to AI and MT, you should never say never, and so I don’t want to say that we academic translators will never become redundant – but I think we will definitely be around for a long time to come, even if our role shifts somewhat towards an editorial, advisory one.[ii]

——————

[i] For a more detailed account of the history and background of MT, as well as a discussion of some of the general key problems, I recommend reading my colleague Marc Prior’s excellent (German-language) article “Gedanken zur maschinellen Übersetzung”.

[ii] Jonathan Downie highlights this advisory or mediating role in his book Interpreters vs Machines: Can Interpreters Survive in an AI-Dominated World? (Routledge 2020). He is talking about interpreters rather than translators, but I think the argument still holds!